Baking the Y Combinator from scratch, Part 2: Recursion and its consequences

It's a pedagogical pet peeve of mine when technical concepts are simply listed in their fully developed state, with little in the way of motivation, derivation, or historical context. This, I think, is like publishing a cookbook filled with beautiful pictures of cakes, but no recipes.

My aim with the "Baking <x> from scratch" series is to describe not only what, but how and why, with the hope that this will lead to a richer and more durable understanding.

(You should read the prequel to this article before you read this one, or it won't make any sense!)

Last time we looked at the Y combinator, I said:

Confronted with this definition, you might ask yourself:

1. Why does it look like that?

2. What is it used for?

3. Why do people still talk about it?

4. (What the heck is a combinator?)

The one-line answer to all of these is: "the Y combinator implements recursion in functional languages that don't allow for explicit self-reference", but it's worth going a little deeper than that. In my opinion, the easiest way to understand what the Y combinator is, at a fundamental level, is to focus on how it produces fixed points. The easiest way to understand why it's important is to focus on how it can be used to implement recursion. In Part 1 we'll do the former (answering 1. and half of 2. in the process) and in Part 2 we'll do the latter (answering 3. and the other half of 2.)

(We'll answer bonus question 4 later in this article!)

Last time we examined the specific mechanism the Y combinator uses to "self-replicate", and learned a bit more about it in the context of formal systems. Now we'll take a look at it from a viewpoint which may be more familiar to you: we'll examine how it can be used to implement recursive functions (and why that matters.)

Part 1 had a mathematical bent to it; Part 2 will be more computationally focused.

Whence recursion?

We've established that the Y combinator produces fixpoints. If you'll remember, that means:

$$f(Y(f)) = Y(f)$$

and also:

$$Y(f) = f(Y(f))$$

We subsequently launched into showing exactly why this second equation is true, but I wouldn't blame you if you left that section with some nagging questions. Questions like:

- It's straightforward to show that the Y combinator satisfies both of the equations above, but does the Y combinator really produce the fixpoint of any unary function? That seems way too easy. Clearly not every function has a fixpoint, so how can we claim it finds the fixpoint of any function?

- I've heard that you can use the Y combinator to implement recursive functions. What does this fixpoint stuff have to do with recursion?

I'm going to address both of these questions at the same time, because as it turns out their answers are connected!

You may have heard of Hilbert's Hotel, a famous thought experiment proposed by David Hilbert in 1925. Hilbert's Hypothetical Hotel contains an infinite number of rooms, numbered 1, 2, 3, and so on, but never stopping.

Hilbert asked: if the hotel is full, must it turn away all new arrivals? The answer, is, in fact, no - to accommodate someone new, every guest can move from their room to the room with the next highest number. Since the hotel is infinite, every guest can do this; there is no guest who will be left without a room to go to. At the end, room 1 will be free for the newcomer. Furthermore, since every room is occupied both before and after the new guest checks in, it's reasonable to say their arrival did not change the number of guests at this infinite hotel.

Using an analogous argument, I'm going to claim that if we have an infinite chain of \(f\)s:

$$f^\star = f(f(f(...)))$$

Then \(f(f^\star) = f^\star\). Every \(f\) in \(f(f^\star)\) can be lined up exactly with an \(f\) in \(f^\star\), so it's reasonable to claim that the two are the same, since they consist of identical terms. The reason we can say so confidently that the Y combinator finds fixpoints for any function is because an infinite chain of nested \(f\) calls is always a fixpoint for \(f\)!

That's cheating, I'm calling you out

That's a fair reaction, but before you indict me for misleading you let me make an observation:

This works because we're using the untyped lambda calculus, specifically. In the untyped lambda calculus there are technically no datatypes, and there are no restrictions on what can be passed to what. You may be thinking of dynamic languages like Python when I say that, but this goes even further. In Python if you try to (for example) divide two strings you'll get an exception, but in the untyped lambda calculus you can pass an arbitrary term into a function that is built to, say, operate on Church numerals, and what you'll end up with is...garbage (or nontermination, eek!) The result (if there is one) will be a term, but it won't necessarily represent anything. That's why Y can "find" "fixpoints" for functions that clearly shouldn't have any, like \(f(n) = n + 1\). The fixpoint technically exists, but it's not a number (it's not even a value, really.)

We proved \(Y(f)\) is equivalent to \(f(Y(f))\), so we know it does represent a fixpoint of \(f\) - it just might not be the type of object that \(f\) is supposed to operate on. Also, it might be infinitely large and not constructable in a finite amount of time.

Saying that Y produces fixpoints is starting to feel like an abuse of terminology

Admittedly the situation is very weird, but there is one case in which this wonky fixpoint behavior makes a lot of sense and comes very much in handy.

On its own an infinite chain of nested \(f\)s isn't very interesting or practical, but we've been assuming so far that \(f\) operates a single argument. Technically it has to take a single argument (since functions in the lambda calculus only take one argument) but we can functionally make it take more by using a technique called currying (yes, named after Curry, of Curry's Paradox fame. He was a prolific guy!)

A curried function simulates a function with two or more arguments using nested one-argument functions. The easiest way to explain is to show you an example:

$$c = \lambda x. \enspace (\lambda y.\enspace x\enspace y)$$

The function \(c\) takes one argument \(x\) and returns another function that takes one argument \(y\). When you supply an argument to the second function, it applies \(x\) to it and returns the result. This is equivalent to a function that takes two arguments and applies the first one to the second one. You can nest functions as deep as you want to simulate a function that takes any number of arguments.

Let's look at an example written in Javascript instead of in the lambda calculus:

const startAdd = (arg1) => {

const finishAdd = (arg2) => {

return arg1 + arg2;

}

return finishAdd;

}startAdd takes a single argument, and returns a function that also takes a single argument. When you supply an argument to the second function, it adds the two arguments together and returns the result. An advantage of doing things this way is that you can supply only a partial list of arguments, and then supply the rest later, at your leisure. A simple example - you could do const addThree = startAdd(3), and then add three to any number n by doing addThree(n).

To pass two arguments to a "regular" function, you do something like add(firstArg, secondArg). To pass two arguments to our curried function, you do startAdd(firstArg)(secondArg).

Now, let's consider what happens when \(f\) happens to be curried. Let's say we write it to take two arguments - the first argument is a function and the second is something else (a number, perhaps). We know that:

$$fix(f)(x) = f(f(f(f(f ...))))(x)$$

So calling \(fix(f)\) on \(x\) actually calls \(f\) with two arguments - the second is \(x\), the first is an infinite \(f\) chain.

But wait - that chain of \(f\)s isn't computable, right? Doesn't it take infinite time to evaluate?

It does, but only if you evaluate it all at once. We dodge the problem in this case because of a handy property of the lambda calculus, which is that it's lazily evaluated.

Lazy evaluation, also known as normal order evaluation, always evaluates the leftmost, outermost function first. This is another way of saying that a function is always evaluated before its arguments, which is another way of saying that arguments are evaluated when they're used in a function body and not before. This is in contrast to eager or applicative order evaluation, which always evaluates the function's arguments before evaluating the function itself. (Lambda calculus terms are, to be more precise, evaluated lazily by convention. You could evaluate a term using eager evaluation, but - in the untyped lambda calculus, at least - you may get different behavior. For example, in some situations evaluation will complete if you do it lazily, and run forever if you do it eagerly.)

Evaluating our chain lazily means that when we evaluate \(f(fix f)\), that \(fix f\) only expands when \(f\) receives its second (curried) argument, \(x\). In other words, \(fix f\) only reduces one step at a time, and not all at once in a way that would make our computation blow up.

This leads us to the big reveal, the connection between fixpoints and recursion: in the lambda calculus, any recursive function is easily expressed as a fixpoint of a regular function that accepts a lambda telling it what to call next (eagle-eyed readers may recognize this as a special case of the continuation-passing style.)

If this hasn't clicked yet, that's okay - I bet it will when you see it in action. We'll implement \(factorial\) (the Drosophila of recursive functions.) I'll write this in pseudocode to spare you from having to read any more lambda terms.

Let's start with the pre-\(fix\) version:

$$preFact\space callback\space arg = return\space 1\space if\space arg == 1\space else\space return\space arg * callback\space arg - 1$$

If \(arg\) is 1 (the base case) we return without calling \(callback\). Otherwise we make a call to \(callback\) using our new \(arg\), which is a little bit smaller than the last one. Now let's make this function recursive:

$$factorial = fix\space preFact$$

Pretty easy! Now \(factorial\) is equivalent to \(preFact(preFact(preFact(preFact(...))))\), but since the lambda calculus is lazy, it doesn't evaluate any farther than \(preFact(fix\space preFact)\) when we provide it with an argument.

Let's look at what happens when we evaluate \(factorial\space 4\):

$$factorial\space 4 = preFact(fix\space preFact)\space 4$$

$$= 4 * fix\space preFact\space 3$$

$$=4 * preFact(fix\space preFact)\space 3$$

$$=4 * 3 * fix\space preFact \space 2$$

$$=12 * preFact(fix\space preFact)\space 2$$

$$=12 * 2 * fix\space preFact \space 1$$

$$=24 * preFact(fix\space preFact)\space 1$$

$$=24 * 1$$

$$= 24$$

\(fix\space preFact\) expands as needed, and is simply thrown away when we hit the base case and don't end up needing to call it.

If you take away anything from this section, it should be that the fixpoint of a "regular" function is an infinitely nested function call - a function call that is passed another function call identical to itself via its argument. In the right circumstances, this can produce recursion that terminates and can be used for practical purposes. That's why everyone seems to care so much about fixpoints.

Why recursion?

So we can use the Y combinator to make recursive functions, which is great, but it's been a hundred years. Why is that so important that we're talking about it a century later?

It's not so much that the Y combinator is special by itself as much as it's recursion that's special. In the context of real-world programming, recursion is mostly a strategy for dealing with data, especially things like trees or linked-lists, but it has a rich and storied history in computer science - in fact, it's deeply connected to the very idea of computation.

Computable functions, as a mathematical concept, resemble the pure functions or subroutines we find in our programs - they turn inputs into outputs in a finite number of steps that only involve the natural numbers. The mathematical concept of a function in general is a bit broader: a function is basically a (often infinite) table of inputs to outputs. This suggests that maybe there are functions that are technically functions, but aren't computable.

It's actually not hard to come up with these; consider \(f(x) = \pi^x\). Since the output of this function is always irrational, it takes an infinite amount of time to produce it only using the natural numbers (you can, of course, produce arbitrarily accurate approximations of it in a finite amount of time.) This is kind of cheating, though, since technically we're looking at a function with an infinite output. (Are there functions that have finite inputs, finite outputs, and finite descriptions, that are still not computable? Yes, and I'll show you one in a few sections.)

For now, we're left with the question: what distinguishes computable functions from other functions? Are there any properties unique to computable functions? This is where recursion enters the picture. I'll ask you to bear with me for a bit, as the path from recursion to computability is a bit circuitous.

Like much of the material we've discussed so far, historical mathematical interest in recursion and recursive functions has its roots in logic and formal systems - particularly in attempts to formalize the laws of elementary arithmetic as simply as possible, without the use of existential quantifiers. It was soon discovered that it was possible to recursively define fundamental operations on the natural numbers (and by extension, the integers, the rationals, and so on.) Let's take a look at a few examples:

First, we'll note that natural numbers themselves are fundamentally recursive structures, insofar as every natural number is either \(0\) or the successor to another, smaller natural number. If we define \(S\) as the function that takes \(n\) to \(n + 1\), we can say \(1 = S(0)\), \(2 = S(1)\), and so on.

Building on that crucial observation, we can define a number of different operations recursively. Addition, for example, looks like this:

$$n + 0 = n$$

$$n + S(m) = S(n + m)$$

This definition has several similarities to a recursive function in a programming language. There's a base case, for example, and the recursion itself involves applying the same operator to a smaller subproblem (since \(S(m)\) is strictly greater than \(m\).)

We can define multiplication in a similar way:

$$n * 0 = 0$$

$$n * S(m) = (n * m) + n$$

And going one level further, exponents:

$$n^0 = 1$$

$$n^{S(m)} = n^m * n$$

Something that I'll call back to later: you'll notice that addition, multiplication, and exponentiation all seem to follow the same "pattern". Adding \(x\) to \(y\) is doing \(S(x)\) \(y\) times, multiplying \(x\) by \(y\) is adding \(x\) to itself \(y\) times, and raising \(x\) to \(y\) is multiplying \(x\) by itself \(y\) times. You can imagine a "super-exponent", where "super-raising" \(x\) to \(y\) is raising \(x\) to itself \(y\) times - for example, 2 "super-raised" to 4 would be \(2^{2^{2^2}}\).

Extending this process forever yields the hyperoperation sequence, where each operation entails repeated application of its predecessor. In general, the \(n\)th operation in the hyperoperation sequence is written as \(x[n]y\), so \(x[0]y = y + 1\), \(x[1]y = x + y\), \(x[2]y = x * y\), \(x[3]y = x^y\)...

Notice that each \(x[n]y\) features \(n\) nested levels of recursion.

If we define a predecessor function like so:

$$Pred(0) = 0$$

$$Pred(S(n)) = n$$

We can even do truncated subtraction:

$$n - S(m) = Pred(n - m)$$

It turns out you can do a substantial amount of mathematics using these kinds of definitions. But that raises the question of what, exactly, counts as "these kinds" of definitions. The formalism we use today to determine what counts as a recursively defined function was introduced by the logician Kurt Gödel in 1931. A recursive function is a function on the natural numbers that can be built up using these predefined building blocks (I won't be completely strict in presenting these but hopefully it's enough for you to get the idea):

- The constant functions \(C_n\) return the natural number \(n\), no matter what their arguments are.

- The successor function \(S\) takes a natural number \(n\) and returns its successor - i.e. \(n + 1\)

- The projection functions \(P_i\) take a list of arguments and return the \(i\)th argument.

- The composition operator \(\circ\) works in the familiar way - i.e. \((f \circ g)(x)\) = \(f(g(x))\)

- Finally, there's the recursion operator \(\rho\). This allows you to construct a function \(f\) by specifying two other functions \(g\) and \(h\). \(g\) handles the base case \(f(0)\), and \(h\) turns \(f(n)\) into \(f(S(n))\)

Soon after these kinds of recursive functions were rigorously formalized, their name was changed from the plain ol' recursive functions to the primitive recursive functions. Why primitive? Well, it turns out these "recursive" functions don't completely capture the idea of recursion. To show you what I mean, I'm going to introduce another function, \(T\). \(T\) is defined like this:

$$T(b, 0) = b + 1$$

$$T(0, 1) = 2$$

$$T(0, 2) = 0$$

$$T(0, n) = 1 \enspace \textnormal{(for n > 2)}$$

$$T(b, n) = T(T(b-1, n), n-1)$$

This recursive definition looks complicated, but actually it turns out \(T(b, n) = 2[n]b\) (which you'll just have to take on faith for now. You can run through some sample calculations to convince yourself this is true, or better yet, write a program. I'd love to prove it to you but I just don't have the space.) This function is a variant of the generalized hyperoperation sequence (which itself is a relative of the Ackermann function.)

\(T\)'s definition clearly aligns with our intuitive notion of a recursive function, and you can easily implement it in any programming language, but it's not primitive recursive. Here's why:

We'll define \(T^\star(x)\) to be \(T(x, x)\) and sketch a proof that \(T^\star\) is not primitive recursive. Since \(T\) can easily be used to implement \(T^\star\), if \(T^\star\) is not primitive recursive, \(T\) clearly can't be either.

Let's consider a sequence of primitive recursive functions \({f_0, f_1, f_2, ...}\) where each \(f_n(x)\) is a canonical example of an unary function with \(n\) nested recursions - for our purposes, that's \(2 [n] x\). It's clear that \(f_{n+1}\) always grows faster than \(f_n\). I'll also assert, but won't prove, that for every primitive recursive function \(r\) there is some \(f_n\) in this sequence such that \(f_n\) grows faster than \(r\) (hopefully you can convince yourself this is true.)

Now, it's clear that any primitive recursive function purporting to be \(T^\star\) must grow at least as fast as \(T^\star\). We can easily prove that \(T^\star\) cannot be primitive recursive by pointing out that since \(T^\star(n+1) = f_{n+1}(n+1)\), \(T^\star(x)\) grows at least as fast as \(f_{n+1}\) for \(x >= n + 1\). This means for any \(f_n\), \(T^\star(x)\) grows faster than \(f_n\) for \(x > n\), which means that \(T^\star\)'s (asymptotic) rate of growth is greater than any primitive recursive function. For a primitive recursive function to keep up, it would need to have infinite nested recursions, which is not possible.

The solution to this problem was to add one more operator alongside successor, projection, composition, and recursion. This is the minimization operator \(\mu\), which searches upwards for the smallest natural number that satisfies a certain condition (technically it's the smallest number that makes a function of your choosing return 0. If no such number exists, \(\mu\) runs forever.)

The set of functions we can define using the primitive recursive operators plus \(\mu\) is called the set of general recursive functions (sometimes called \(\mu\)-recursive functions.) General recursion is enough to cover all the functions we'd intuitively call recursive - \(T^\star\) is general recursive, for example - but the easiest way to prove that is to prove that the concept of general recursion lines up exactly with our notion of computation.

Turing-Completeness

The concept of a general recursive function was fully formulated around the mid-1930s. By the end of that decade there were three major independent notions of a "computable function" (among many others) - Turing Machines, terms in the Lambda Calculus, and general recursive functions. It was pretty quickly proved that these were all equivalent: any general recursive function can be ported to the Lambda Calculus or turned into a Turing Machine, and vice versa. The famous Church-Turing thesis says that all three of these concepts (and other equivalent formulations) completely encapsulate the idea of computation.

It's worth noting that "computation" was a fuzzy notion before the Church-Turing thesis; the consensus was that a computing a function involved applying a finite number of mechanical steps that required no human judgement or intuition - but no one really specified what "mechanical" meant in a precise way. The Church-Turing thesis is the claim: "these formal criteria effectively embody the informal idea of computation." This is, ultimately, an opinion (albeit a widely accepted one), so it can't really be proven or disproven. This is why the Church-Turing thesis is a thesis and not a theorem!

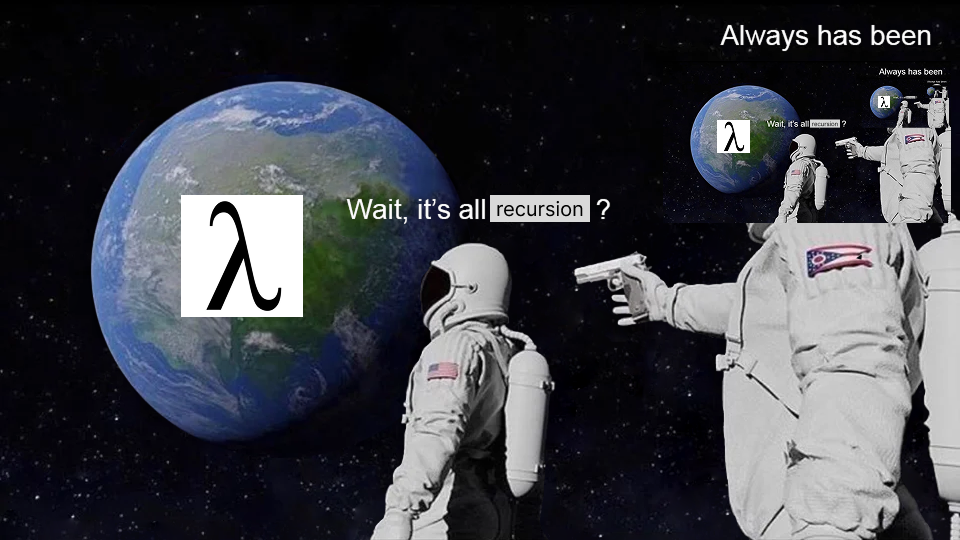

You may find it surprising that computation is actually recursion in a trenchcoat. To help convince you that this is true, we'll sketch a proof that the set of all recursive functions is equivalent to the set of all lambda-definable functions, i.e. the set of all functions that can be written as terms in the Lambda Calculus (the proof of the recursion \(\leftrightarrow\) Turing Machine link is analogous.)

-

Every recursive function can be represented as a lambda term (in other words, the set of all recursive functions is a subset of the set of all lambda definable functions.)

- We can prove this by porting the "building blocks" discussed in the last section to the Lambda Calculus:

- We can use Church encoding to represent the natural numbers.

- The successor, projection, and composition functions are all fairly easy to implement (you can look these up or try to write them yourself!)

- To implement primitive recursion, we can use (drum roll...) the Y combinator! We can also use the Y combinator to implement minimization, by starting our parameter at 0 and incrementing it instead of decrementing. The recursion will terminate when we find the condition we're looking for...or it won't and we'll run forever!

-

Every lambda term can be represented as a general recursive function (in other words, the set of all lambda-definable functions is a subset of the set of all general-recursive functions.)

- This direction is more involved, so I'm going to appeal to your intuition rather than try to make an ironclad argument.

- Being a formal system, evaluation in the lambda calculus proceeds according to a finite set of rewrite rules. Applying a rule means:

- Parsing the current term and creating some sort of parse tree.

- Figuring out which rule to apply next, and which sub-term to apply it to. This can be done by crawling the parse tree and doing pattern-matching as necessary. (Note that the particular choice of term and rule depends on the evaluation strategy we choose to use. In all cases, however, the choice is always deterministic.)

- Applying the appropriate rewrite rule by replacing part of the parse tree with a newly generated subtree.

- All three steps above can be accomplished by general recursive functions.

- Rewrites continue until no more writes can be applied, at which point evaluation finishes. We can use the minimization operator to continue rewriting until no further rewrites can be applied (if we never reach that point, of course, the function will keep computing forever.)

Put informally, the idea behind the proof of the lambda \(\rightarrow\) recursion direction is that it's possible to implement an interpreter for the lambda calculus using a general recursive function. Once we have that, we can create a general recursive function for any lambda-definable function by simply passing the appropriate lambda term to our interpreter!

But wait - we said earlier that general recursive functions only operate on the natural numbers, so how can we use them to process lambda terms?

The answer is by using Gödel Numbering. This is basically the opposite of Church encoding, where we use plain old numbers to represent sequences of symbols in an arbitrary formal system - our interpreter then manipulates these numbers, instead of the symbols themselves. An interpreter that did this would no doubt be very complicated, but remember that we don't have to actually construct such an interpreter. We don't even have to design it - all we have to do is prove that we can make it out of general recursive functions.

If two sets are subsets of each other, they must be equal - so the set of lambda-definable functions is exactly the set of general recursive functions!

What does this tell us about recursion in particular? It tells us that a system or language that can compute general recursive functions can compute any computable function at all. In other words, it can compute any function computable by a Universal Turing Machine - this is what we mean when we say a language is "Turing-Complete." Every Turing-Complete language is exactly as powerful as every other one, and in a sense they are all maximally powerful, in that they all can compute anything that can be computed (some systems can compute things faster or more "elegantly" than others, but they can all get the computation done.)

...but at what cost?

Recall from the last article the notorious Curry's Paradox, which showed that the untyped lambda calculus permits us to write down terms which are "badly-behaved." This is an informal notion, but in the context of the lambda calculus a "badly-behaved" term reduces forever.

With a hundred years of hindsight it's pretty obvious you can write programs that run forever; we saw another simple example of such a term when we looked at the Omega Combinator. But you can also write programs that run forever in Python, or Java, or Ruby, or...almost anything, really. Is that actually a problem?

Like always, it depends on your point of view. In practical programming, nontermination isn't desirable, but it's usually easy to tell when code is at risk for it (for example, when there's a while(true), or when you're calling a graph that may have cycles.) It's also easy to tell when it's happening, because it brings everything to a halt in a way that other bugs usually don't. However, from a theoretical point of view it does have pretty notable implications, particularly because if your computation never finishes the thing you're trying to compute isn't computable. It's not necessarily a crisis that some programs will never terminate, but it's at least worth exploring why it happens, and whether there's anything we can do about it.

One simple question we can ask is: what are the conditions for non-termination? A function with an infinite loop (or a recursive function that has no base case) will obviously run forever. What other cases are there? Are there any tests we can do to determine if a program will terminate?

We're not the first to ask this, of course. It is in fact a very fundamental question in computer science, known as the Halting Problem. A function \(H\) that solves the Halting Problem takes a program as its input and returns \(true\) if and only if that program will terminate when you run it, and \(false\) otherwise. We know that \(H\) exists in the abstract. Since every program either halts or doesn't, there is some infinitely large table that maps every possible program to a boolean indicating whether the program halts. But can we compute \(H\)?

No, and here's why:

Let's say you come up with an implementation for \(H\) and you're certain it's correct. "People thought this was impossible, but I'm built different", you say. We'll take your \(H\) and use it to write a different function, \(G\).

We'll implement \(G\) according to the following Javascript-y psuedocode:

const G = (targetFunction) => {

const halts = H(targetFunction(targetFunction))

if (halts) {

while (true) {

// run forever

}

}

return;

}\(G\) takes a program and uses \(H\) to determine if that program halts when applied to itself. If it does halt, \(G\) loops forever. If it doesn't halt, \(G\) returns immediately.

If the structure of \(G\) seems kind of arbitrary, consider this: what is \(H(G(G)))\)?

- Let's assume it's \(true\). \(G(G)\) would take \(G\) and use \(H\) to see if it halts when applied to itself. By assumption \(H\) must return \(true\) in the body of \(G(G)\). So then \(G(G)\) would enter an infinite loop...which means it doesn't halt. Oops, guess it can't halt.

- Let's take the other side. If \(H(G(G))\) returns \(false\), \(G(G)\) will return immediately, which means it does halt (by the way, is this construction starting to look familiar?)

Once again we've arrived at a paradox. But in this case it might not be clear what the implications are. After all, \(G(G)\) is a program that we can write down, and it will either halt or it won't; it can't be in some weird superposition of halting and not halting. What does this paradox really mean?

Well, it means that \(H\) is going to get the answer wrong when we use it on \(G(G))\) (or it will run forever, which disqualifies it anyway.) It has to get the answer wrong, always, by the logic above. If it seems like we could maybe "patch" \(H\) to get around this, consider that changing \(H\) changes the implementation of \(G(G)\), which may well change whether \(G(G)\) halts or not. It's a moving target that we can never catch up to.

This proves that \(H\) is undecidable, which is another term for "not computable." You'll notice that we have also proved that \(H\) cannot be defined recursively!

(It turns out there is a lot of interesting deterministic static analysis that we know is impossible to do for Turing-Complete languages, because it could be used to solve the Halting Problem. Usually we can kind of fudge it by doing a weaker or incomplete version of the analysis, but we can't do it in full generality on every possible program.)

The undecidability of the Halting Problem shows that there are functions that seem computable, but aren't. More surprisingly, it also shows there's no way for us to tell in advance which programs are going to terminate and which will run forever.

That's too bad.

Well, let's think for a bit. If we're using these languages to understand computation better, it's in our interest to get a really good understanding of the cases where computation breaks down. One way to do this is to try and construct a language that doesn't allow for nontermination - what would that entail?

Allow me to (informally) introduce the simply-typed lambda calculus. It does indeed have types, and they are indeed simple! So simple, in fact, that I can write down all the rules here:

- Every "bare" (i.e. free) variable has the same type, which is \(\iota\) (that's the Greek letter iota)

- A lambda term's parameter has to have a type annotation which says what type it is. This looks like (for example):

$$\lambda x:\iota. \enspace x $$ - Every lambda term has a function type \(\sigma \rightarrow \tau\), where \(\sigma\) is the type of the lambda's parameter and \(\tau\) is the type of the lambda's body. For example,

$$\lambda x:\iota. \enspace x $$

has a function type of \(\iota \rightarrow \iota\), and the term

$$\lambda x:\iota. \enspace \lambda y:\iota \rightarrow \iota. \enspace y\enspace x$$

has a type of \(\iota \rightarrow (\iota \rightarrow \iota) \rightarrow \iota\)

This probably seems very basic - so basic, in fact, that you may doubt it does anything at all. Hold that thought while we add types to the Y combinator.

To refresh your memory, the Y combinator looks like this:

$$ Y = \lambda f. \enspace (\lambda x. \enspace f(x \enspace x)) \enspace (\lambda x. \enspace f(x \enspace x)) $$

We'll determine what its type should be, adding type annotations as necessary along the way. The most sensible way to do this is from the bottom up, so let's start by looking at \(\lambda x. f(x \enspace x)\). In fact, let's start even simpler and just look at \(\lambda x. x \enspace x\). What type annotation should we use for \(x\), and what is the type of the entire term?

Well, we apply \(x\) to itself, so we know it must have a function type, i.e. a type of the form \(\sigma \rightarrow \tau\). What should \(\sigma\) be? Well, it should be the type of the argument it gets (i.e. the type of \(x\)), so expanding we get:

$$\sigma \rightarrow \tau$$

$$(\sigma \rightarrow \tau) \rightarrow \tau$$

$$((\sigma \rightarrow \tau) \rightarrow \tau) \rightarrow \tau$$

$$...$$

Oh...we can't type the Y combinator. Types, like terms, must be finite, which means we can't apply a term to itself while obeying the type rules. So the Y combinator doesn't exist in the simply typed lambda calculus; it's not a legal term. The omega combinator isn't either. In fact, you can't write any fixpoint combinator down without breaking the typing rules.

On the bright side, it can be proved that the simply typed lambda calculus is strongly normalizing (although we don't have space to go over the details.) What this means is that if you keep applying rewrites to an arbitrary term, you will always eventually reach a point where you can no longer apply any more rewrites (the form the term ends up at the end in is referred to as that term's normal form. In the simply typed lambda calculus, the normal form of a given term is always the same, no matter which rewrites you apply and the order in which you apply them!) This property means that any program written in the simply typed lambda calculus is guaranteed to terminate, just like we wanted!

Note that the exclusion of the Y combinator protects against things like Curry's Paradox. In fact, Alonzo Church introduced the simply typed lambda calculus in 1940 for precisely this reason.

I alluded to this once before: types are a commonly used antidote to paradoxical constructions, not only in programming languages, but in formal systems like set theory as well.

This strong normalization property may seem like a contradiction; didn't we just prove that we can't filter out non-terminating functions ahead of time? Yes, and there is in fact a catch - the simply typed lambda calculus is, in a sense, too restrictive.

Consider: no fixpoint combinators can be typed in this system. This means that recursion (even primitive recursion) is unavailable to us in general; while some very restricted types of recursive functions will typecheck, most won't - even ones that clearly terminate (for example, we can express multiplication but not exponentiation!)

Our new termination-free system filters out the misbehaving programs you can write with the untyped lambda calculus, but it precludes a lot of legitimate programs too. This suggests a tradeoff - you can have a system that is predictable and well-behaved, but there will be many things you can't do in that system. On the other hand, you can loosen the reins to make your system more expressive and powerful, but in exchange you will incur paradoxes, or functions that run forever, or other things that make the system break down.

You might be wondering if this always has to be the case. Do you always have to admit bad behavior if you want maximum power? This is a question we happen to know the answer to, which is: "yes, unequivocally!" This was very conclusively proved by Gödel (yes, I've been talking about him a lot) in one of the most significant (perhaps the most significant) results of the early 20th century. Once a formal system reaches a certain level of power, it is always possible to somehow construct a paradox in it. The way Gödel proved this is out of the scope of this post, but I encourage you to check it out sometime; it's somehow shocking and obvious at the same time, in a "you can do that?!" sort of way ("Gödel's Proof" is a great introduction, if you're looking for one.)

Recursion is significant to programming, because it adds a tremendous amount of expressive power to a programming language (for a price!) Consequently, it's significant that you can implement recursion in the untyped Lambda Calculus using the Y combinator.

Real-world recursion (or: are we really gonna use this?)

I said at the beginning of this article that it'd be a little more computationally oriented, but admittedly we have mostly focused on the theory of computation. I can imagine a decent fraction of you saying (if I may put words in your mouth): "Matt, every time we press you on how to use this stuff, you give us a history lesson. That's all very interesting, but can we apply the Y combinator right now? Will it help us structure our code? Will it make us better at our jobs? Will knowing about it save us time or effort?"

I confess that I've been dodging a bit, because the answer to these questions is: not really. Sorry. While of great theoretical interest, the Y combinator is just not useful for practical programming (at least in my opinion.)

Really? Don't language designers use it?

Well, remember that you need the Y combinator to do recursion in languages which don't have named functions. It's important that we know this is possible and how to do it (it proves, for example, that named functions are not in any way an "essential" part of computation), but there's no reason not to use named functions in practice; they just make everything (including recursion) much easier. When your function has a name, it can just call itself by name to recur - no other special mechanisms are required. The vast majority of languages used in practice used named functions to implement recursion, including popular functional languages like Haskell and Clojure.

You can't think of a single use case?

The only argument I've encountered for using the actual Y combinator in practice is to implement a functional variant of open recursion. This post (particularly part 2) gives a good summary of the technique (another brief discussion can be found in this paper.)

Open recursion is, loosely, a technique in which you "modify" a recursive function at runtime to change the function that is the target of the recursive call. To make this more concrete, using open recursion you can, for example, wrap a recursive function without having to wrap the recursive calls too. For example, let's say you had a function called isEven and you wanted to memoize it. Without the Y combinator, you could write:

const isEven = memoize((num) => {

if (num == 1) {

return true;

} else if (num == 0) {

return false;

} else {

return memoize(isEven)(num - 2);

}

})

With the Y combinator you can write something like:

const isEven = Y(memoize((recurse, num) => {

if (num == 1) {

return true;

} else if (num == 0) {

return false;

} else {

return recurse(num - 2));

}

})

You only write memoize once, around the outer function. You can even use this technique to wrap the function multiple times.

That's the argument, anyway. I understand and appreciate the idea, especially on a theoretical level, but:

let isEven = (num) => false;

const _isEven = (num) => {

if (num == 1) {

return true;

} else if (num == 0) {

return false;

} else {

return isEven(num - 2);

}

}

isEven = memoize(_isEven);This is smaller and simpler than the Y combinator, and it works just fine. If you have late binding at your disposal (which you usually do if you're using a modern programming language) you basically get open recursion for free.

So it's just not relevant to professional programmers?

You could look at it that way. But I think it's still worth knowing about, because the idea attached to it - that general recursion is the fundamental "stuff" of computation - shows up in practice all the time. If you've used any pure functional language at all, you know firsthand that recursion is how you get stuff done. There are no loops or other special control structures in these languages, so you simply can't do anything interesting without relying on recursion (even things like lists are, in theory, defined recursively, although based on the language the runtime may implement them imperatively to speed things up.)

Knowing that recursion is fundamental - and understanding it deeply, and learning to think recursively on an instinctual level - is very important, and probably will pay dividends in your career, even if you're not a hardcore functional programmer.

Bonus 1: Alternate fixpoints

In part one I mentioned that the Y combinator is a fixpoint combinator, but not the fixpoint combinator. There are many other fixpoint combinators (infinitely many, in fact), with various properties both practical and esoteric. A great example is the Z combinator, which as the name suggests is only a little bit different from the Y combinator.

I just discussed whether it was worth using the Y combinator in practice; but what exactly would it look like to implement the Y combinator in a language like Python or Javascript? We just have to translate all the components of the lambda calculus term into the new language, right?

const y = (f) => {

const inner = (x) => f(x(x));

return inner(inner);

}As you've probably guessed, no. The issue is that Javascript does not have lazy evaluation, it uses eager evaluation. This means that all of the parameters to a function must be completely evaluated before that function is called.

Note we're assumingfis curried here, i.e.f(f)returns a function that accepts one argument. The way you'd callfwith two arguments isf(arg1)(arg2).

This really throws a wrench in the works when we try to evaluate inner(inner). To evaluate inner(inner) we need to evaluate f(inner(inner)), which means we need to evaluate inner(inner)...

So, the Y combinator will flat out never terminate in languages with eager evaluation. But we can work around this as long as we assume f takes another argument (which it needs to if we're trying to implement terminating recursion.)

If you'll recall from last time, x(x) is the place that y(f) replicates itself. If you'll recall from this time, things worked out with lazy evaluation because we only replicated \(Y(f)\) when we absolutely needed to - i.e when we needed to make a recursive call to \(f\).

We need to make this implicit behavior implicit. f is expecting a callback that it can use to make the recursive call - i.e. a function that accepts a single argument. x(x) would produce such a function (namely, y(f)), but we can't evaluate it without getting stuck in a loop. So, instead, we'll create a new function that simply takes an arg, and passes that arg to x(x). This might sound like the same thing, but the important thing is that we can create this callback - (arg) => x(x)(arg) - without evaluating x(x). x(x) only gets evaluated when need to make the next recursive call to f, just like we wanted.

A straightforward change produces our Z combinator:

const z = (f) => {

const inner = (x) => {

const recurse = (arg) => x(x)(arg);

return f(recurse);

};

return inner(inner);

}When people talk about implementing the Y combinator in languages like Python or Javascript, usually they're actually talking about implementing the Z combinator!

If you're curious, in the lambda calculus the Z combinator looks like this:

$$ Z = \lambda f. \enspace (\lambda x. \enspace f(\lambda v. \enspace x \enspace x \enspace v)) \enspace (\lambda x. \enspace f(\lambda v. \enspace x \enspace x \enspace v)) $$

Bonus 2: Y Combinator, not the Y combinator

As silly as it sounds, it's almost certainly the case that you would not be hearing so much about the Y combinator if Paul Graham hadn't named his accelerator after it in 2005. So why is it called Y Combinator, anyway?

The historical record appears to be thin; my guess is that it came up during brainstorming and just had a certain ring to it (I did find one recent Paul Graham post referring to the Y Combinator as "one of the coolest tricks in the lambda calculus." I'm sure if this post makes its way to Hacker News there will be plenty of people who can weigh in!)

The official answer: "[The Y combinator is] a program that runs programs; we're a company that helps start companies."

Now you know!